Security is a crucial aspect of the artificial intelligence (AI) trend, as both an opportunity and a concern. AI-powered cybersecurity products are becoming more common. Another side of the AI-security intersection is the vulnerability of AI systems to abuse and misuse. This new generation of technology opens up a range of new products for vendors and customers, note Scott Crawford, research director, security, and Mark Ehr, senior consulting analyst, information security, at 451 Research, a part of S&P Global Market Intelligence.

AI for security

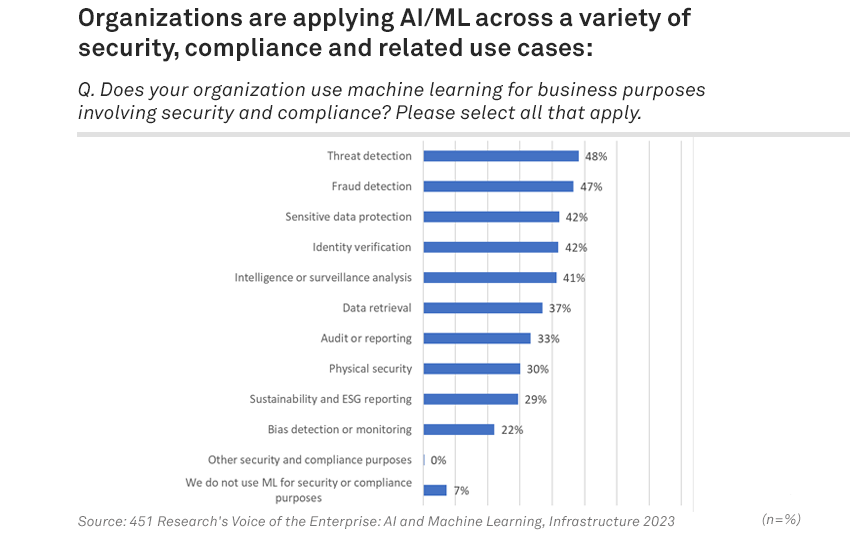

Machine learning has been used in security for years, particularly in areas such as malware recognition, where the sheer number of malware variants demands scalable and responsive approaches. Machine learning has also underpinned the rise of user and entity behavior analytics. Both supervised and unsupervised machine learning have been used to refine security analytics by identifying patterns and anomalies.

Generative AI has opened new possibilities in security operations (SecOps). It can analyze large volumes of data and surface useful insights, easing the burden on security analysts. It can also help less-experienced analysts build expertise, which may help address the cybersecurity skills shortage.

A growing number of vendors are promoting AI applications in security, pointing to an expanding field of innovation and competition. Microsoft Corp., an OpenAI partner, introduced Microsoft Security Copilot earlier this year. Other offerings in the market include Google Cloud Security AI Workbench and Amazon Web Services' alignment of Amazon Bedrock with its Global Security Initiative.

Security for AI

Security concerns regarding AI touch on vulnerabilities that can arise from the underlying software, the potential misuse or abuse of AI functionality, and the possibility of adversaries leveraging AI for new types of exploits.

The cybersecurity products and services market has already begun addressing this issue, with participation from startups and major vendors. Practitioners are researching threats that target AI, in addition to developing methods to detect and defend against malicious activity. At DEF CON 2023, the Generative Red Team Challenge tested AI models provided by companies such as NVIDIA Corp., Anthropic, Cohere, Google and Hugging Face. Existing approaches are also being extended to AI. MITRE's Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) knowledge base, which helps improve detection and response technologies, now has an AI counterpart in the Adversarial Threat Landscape for Artificial Intelligence Systems (ATLAS) initiative.

Efforts such as the AI Vulnerability Database aim to catalog failures of AI models, datasets and systems. In addition, techniques used to secure the software supply chain are being applied to AI-specific challenges.
