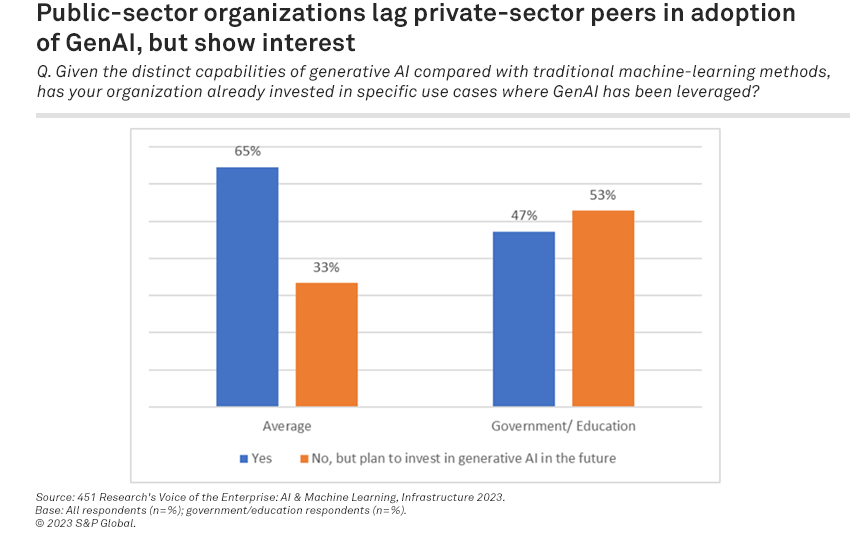

Like their private-sector industry peers, governments and other public-sector organizations are interested in the benefits associated with the latest generative AI (GenAI) tools. US government agencies are roughly divided into three main camps: early adopters, wait-and-see pragmatists, and skeptics.

Early adopters have outlined guidelines for employee use and started to deploy publicly available tools for some emerging use cases. The majority of governmental organizations are in wait-and-see mode, determining best practices for if and when to use the tools, which are relatively expensive to deploy under a city- or department-level subscription.

The last camp, skeptics, includes cities and states that have outright banned the use of tools in their localities, citing fears around cybersecurity and privacy. New York City’s Education Department banned ChatGPT deployment in schools in January, before reversing the decision in May. In Maine, government employees were barred from using ChatGPT for six months as the state outlined guidance around usage.

The Take

As with any new technology that emerges, from cloud to the internet of things, the public sector will have a unique adoption curve compared with industry peers. The opportunity for generative AI in the government space lies in automating processes around content summarization and generation, making more and better data available for citizens, and generally freeing up employee time and resources. There is an appetite in local, city and state agencies to deploy these tools as long as vendors can address data security and privacy, as well as concerns about runaway costs associated with the use of generative AI applications.

Policy overview

Ten states have passed regulations related to artificial intelligence set to go into effect this year, according to the nonprofit Electronic Privacy Information Center. Fewer have outlined policies around generative AI, although New York, Pennsylvania and Rhode Island are among the states with bills designed to hold generative AI models to certain standards to limit bias and disclose when AI is used to create “synthetic media.” In September alone, Kansas and California announced new statewide generative AI guidelines.

- California Executive Order N-12-23. Citing the state’s geographic dominance in generative AI vendors and talent, Governor Gavin Newsom released an executive order on state agencies’ use of the new technology. Newsom ordered state departments to conduct a risk assessment of beneficial GenAI tools, with a focus on potentially high-risk use cases. The order also directs the state’s cybersecurity center, threat assessment division and department of technology, among others, to conduct risk analysis related to threats and vulnerabilities of the state’s critical energy infrastructure. By March 2024, California plans to have infrastructure ready to conduct GenAI pilots, namely around improving citizen experience with government services and supporting state employees.

- Kansas generative AI guidelines. Kansas’ Office of Information and Technology Services outlines policy for all business use cases in the state. The policy specifically governs the use of GenAI in the development of code, written documents, research, summarizations and other business decisions as necessary. The state’s OITS requires any outputs produced from GenAI to be reviewed by human operators for accuracy and privacy before being disseminated, and to not be solely relied on when making decisions. Data that is not released publicly cannot be entered into generative AI applications, according to the guidelines.

Use cases

Deployments are still emerging for public-sector generative AI applications, with many cities and states interested in the benefits of the technology, but cautious about the security and privacy of the data they must supply as input. Use cases are broadly similar to those emerging in the private sector, although government agencies have more guidance around disclosures, as well as around protecting private or secure city/citizen data. Some vendors are positioning their GenAI applications for government as “boring” AI that can help governments do more with less.

With regard to individual productivity, these tools can free up time and resources by taking a first pass at memo writing, or automating the retrieval of FAQ content for citizens. It is worth noting that for most, if not all, deployments, the output must be verified by a human, and disclosure around AI use must be made. It remains unclear whether cities will eventually look to build their own locally specific language models, given cost, time and energy consumption constraints. For now, the following have emerged as use cases:

- Chatbots and virtual assistants. These applications leverage natural language processing to respond to citizen inquiries based on proprietary training data. Common data includes FAQ documents, transit schedules, city information and services data, and other web-based data. While many of these chatbots were initially rules-based and unable to respond beyond simple questions, governments are turning to AI-powered and generative AI chatbots to free up city employees’ time and reduce time spent on calls. VIA Metropolitan Transit, which serves San Antonio, Texas, uses an IBM watsonx-powered chatbot to provide up-to-date transit information for riders. Beyond operating 24/7, the chatbot leverages call-center data and collects real-time transit data via APIs, to give riders information on where their next bus is.

- Code interpretation and data analysis. Depending on the privacy requirements of the dataset, cities can deploy code interpretation to make sense of large datasets. Code interpreters can create summary analytics and data visualizations, and detect patterns. In Boston, officials noticed an uptick in 311 graffiti-related reports. The city used Folium, a Python library for interactive maps, to render the data as an HTML heatmap of incidents. The potential for cities to use their existing open data for use cases like heatmapping remains largely untapped. Bard and ChatGPT both provide code interpretation services.

- Intelligent document recognition and processing. IDP can include report summarization, content generation and translation services, among others. It leverages natural language processing, pattern recognition and data extraction algorithms to generate structured data out of structured or unstructured input. The city of Boston deployed IDP on community summer brochures to break out activity by age range and activity type. Additional use cases include writing job descriptions or first drafts of memos. Microsoft Corp., AWS and Oracle Corp. offer IDP, the first two with specific tools for government customers.
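
To make the heatmapping use case from the code interpretation item more concrete, the following is a minimal sketch of the aggregation step behind an incident heatmap. It is not the code used by any city: the coordinates are invented for illustration, and the function names `bin_reports` and `to_heat_points` and the 0.001-degree cell size are assumptions. It uses only the Python standard library to count reports per grid cell.

```python
import math
from collections import Counter

# Hypothetical sample of 311 graffiti reports as (latitude, longitude) pairs.
# These coordinates are made up for illustration; they are not real city data.
REPORTS = [
    (42.3601, -71.0589),
    (42.3603, -71.0587),
    (42.3611, -71.0574),
    (42.3499, -71.0783),
    (42.3602, -71.0588),
]


def bin_reports(reports, cell_size=0.001):
    """Count reports per grid cell; each cell is cell_size degrees per side."""
    counts = Counter()
    for lat, lon in reports:
        # Snap each point to the integer index of the cell that contains it.
        cell = (math.floor(lat / cell_size), math.floor(lon / cell_size))
        counts[cell] += 1
    return counts


def to_heat_points(counts, cell_size=0.001):
    """Turn cell counts into [lat, lon, weight] rows, busiest cell first."""
    points = []
    for (row, col), n in counts.most_common():
        # Use the center of the cell as the representative coordinate.
        points.append([(row + 0.5) * cell_size, (col + 0.5) * cell_size, n])
    return points


if __name__ == "__main__":
    for lat, lon, weight in to_heat_points(bin_reports(REPORTS)):
        print(f"{lat:.4f}, {lon:.4f}: {weight} report(s)")
```

The rows follow a latitude, longitude, weight layout, which is the shape a mapping library such as Folium's `HeatMap` plugin accepts, so a city with Folium installed could likely pass them along to render the interactive map. Binning before plotting also means only aggregate counts, not individual reports, need to leave the source dataset.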
