As we begin 2020, we inevitably look forward to what the new year will bring. But the dawning of a new decade also prompts us to reflect on the profound impact that technology has had on society over the last 10 years.

From the smartphone and social media to the connected home (and car) and the rise of the cloud, e-commerce and digital payments – to name but a few developments – tech has not just become a part of modern life; it has become an integral enabler of modern life, at home, at work and at play.

And while this certainly comes with some profound challenges and concerns – the optimism of tech as a universal ‘force for good,’ such as the role it played in the Arab Spring of 2011, has been replaced by an altogether more pessimistic tone – there is also little doubt that the next decade will usher in more sweeping change as technology becomes increasingly pervasive, contextual, intelligent and automated.

We have tapped into the 451 analyst ‘hive mind’ to present highlights of the issues, trends and innovations that we expect to drive the tech industry narrative over the next year and beyond as we begin a new digital decade.

The 451 Take

The last decade brought some fundamental changes to the nature and role of enterprise IT. ‘Digital Transformation’ may already be something of a cliché, but it reflects the new reality that an organization’s ability to embrace and exploit technology – while also minimizing risk – is becoming a fundamental determinant of its success. As ever, these opportunities have to be balanced against a substantial roster of challenges, not to mention a background of evolving and often uncertain economic and political circumstances. As we kick off the new year – and a new decade – the 451 Research analyst team is committed to providing our clients with the data and insight required to help navigate these challenges and opportunities. We look forward to working with you.

1. 2020 is the Year Enterprises will get Serious about AI Adoption

We begin our predictions with artificial intelligence.

Overhyped and overexposed it may be, but AI and machine learning (ML) consistently rank as top areas of new investment by enterprises in our research. The reasons are clear: new approaches to data processing, data management and analytics not only are key to differentiating the most data-driven companies from the least data-driven, but are also fundamental to the ability to execute on digital transformation strategies. And undoubtedly there is already substance to the hype; AI is experiencing some significant, sustained adoption in the enterprise setting.

But as adopters move from experimentation through proof-of-concept to full deployment, they inevitably face an oft-overlooked challenge: the large and mutable nature of AI workloads. Those who have adopted, or are looking to adopt, AI will need to reevaluate their current infrastructure to accommodate the demands of AI workloads.

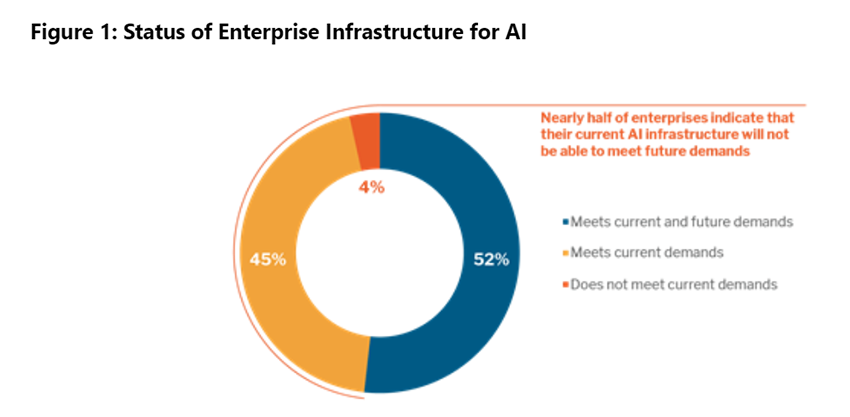

According to the 451 Alliance, nearly half of all enterprises indicate that their current AI infrastructure will not be able to meet future demands. This insufficiency can occur at any step in the ML process – data ingestion and preparation, model training or inferencing.

Additionally, as adoption of machine learning grows, enterprises will need to formalize procedures around ML operationalization (MLOps). At present, many organizations likely don’t have enough models for model management to be an obvious problem.

However, the challenges of operationalizing the technology expand exponentially with each additional model. As with any software, AI applications need to be monitored. But what also needs to be monitored are the predictions they make. Unlike rules-based systems, these models learn and adapt, so the predictions they make when they are initially deployed are likely to be different from the ones they make after they have been deployed for some time.
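One way to picture what monitoring predictions might involve is to compare the distribution of a model's scores at deployment with the distribution some time later. The following is a minimal sketch, not a method drawn from our research: it assumes the model emits scores between 0 and 1, and the function name `population_stability_index`, the bin count and the sample data are all hypothetical choices made for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float], recent: Sequence[float], bins: int = 10
) -> float:
    """Return the Population Stability Index (PSI) between two score samples.

    Assumes scores lie in [0, 1]; out-of-range values are clamped to the
    first or last bin. Larger values mean the recent predictions have
    shifted further from the baseline.
    """
    if not baseline or not recent:
        raise ValueError("both samples must contain at least one score")

    eps = 1e-6  # floor for empty bins so the logarithm stays defined

    def bucket_shares(scores: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for s in scores:
            idx = max(0, min(int(s * bins), bins - 1))
            counts[idx] += 1
        return [max(c / len(scores), eps) for c in counts]

    base = bucket_shares(baseline)
    cur = bucket_shares(recent)
    # Each term is non-negative, so PSI is zero only when the shares match.
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical scores captured at deployment and again some time later.
at_deployment = [0.1, 0.2, 0.3, 0.4, 0.5]
months_later = [0.6, 0.7, 0.8, 0.9, 0.95]

unchanged = population_stability_index(at_deployment, at_deployment)
drifted = population_stability_index(at_deployment, months_later)
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, although the right threshold depends on the model and the business context. In practice a check like this would run on a schedule for every deployed model, which is part of why the operational burden grows with each model added.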

We expect to see continued evolution of the ecosystem to support these and other emerging requirements through 2020 and beyond.

2. A Multibillion-dollar Opportunity Emerges in the Data-driven Experience Economy

Significant disruption across industries and the rising influence of the empowered consumer continue to exert pressure on businesses to deliver differentiated and consistent experiences across the entirety of the consumer journey.

Simultaneously, business models are shifting, with the increasing prevalence of the subscription economy changing the long-term economics and relationships between brands, retailers and consumers, demanding a new approach to engagement models that emphasize loyalty-building and retention. In effect, this is forcing the evolution of the entire technology stack and organizational culture in order to enable real-time, contextually relevant experiences.

As a result, data remains a core battleground for creating unified customer experiences. Businesses need to not only capture and unify disparate sources of consumer data but effectively contextualize and operationalize information to push critical insights across channels and differing organizational stakeholder groups with a hand in shaping the customer journey.

The missed revenue opportunities that US B2C businesses face are significant (see figure below). Putting digital tools to work in a transformative way ensures that data, insights and key technologies connect people with information and processes, leading to a better experience for customers and, ultimately, business growth.

3. Tech Firms Further Encroach on the Banking Sector with a Strong Customer Experience Focus

Financial services have become part of the ‘stack’ for technology companies as they’ve looked to increase stickiness with users and glean valuable data insights.

For instance, 2019 saw Apple launch a credit card, Google collaborate with Citi and Stanford Federal Credit Union to launch a checking account, and Uber unveil a financial services suite dubbed Uber Money. We believe these are just the beginnings of the tech industry’s deeper push into financial services, with the goal of owning a greater variety of customers’ financial interactions.

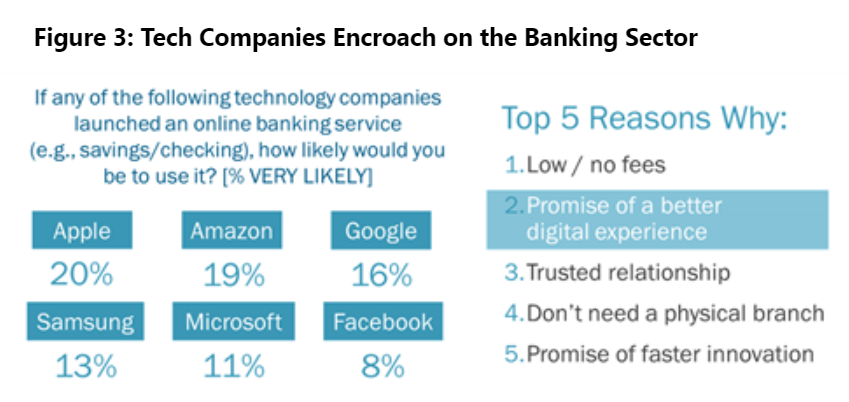

Our consumer data shows latent potential, with 20% of consumers stating they would be ‘very likely’ to use an online banking service from Apple if it were available, while 19% state the same for Amazon and 16% for Google. When we probe the top reasons why these consumers are willing to bank with tech providers, the ‘promise of a better digital experience’ ranks as the number-two factor. This is a battleground where traditional financial institutions will be challenged to compete given the deep developer resources and design expertise of Silicon Valley companies.

Indeed, while most tech companies have no aspirations to become regulated financial institutions, they are eager to take ownership of the customer experience. This poses a relationship disintermediation threat to banks, not to mention the risk of deposit displacement, interchange revenue erosion, and greater challenges increasing product (e.g., investment/trading) penetration rates.

4. Diversity in the IT department: A Decade of Change Must Start Now

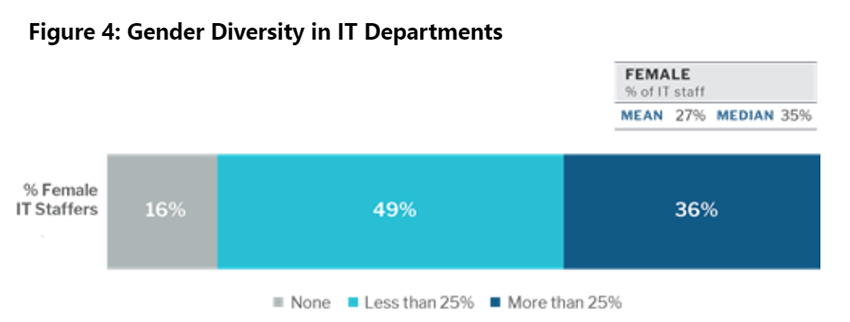

That the typical IT department is still overwhelmingly male is no surprise. Half of respondents in the 451 Alliance said women accounted for less than a quarter of their company’s IT staff. Even worse, 16% of respondents said there were no women at all working in their company’s IT department (see figure above).

It’s clear that all organizations (governments included) need to do more to promote IT workforce diversity in all of its forms – not just because it’s the right thing to do, but because it’s good for business. Our research suggests, for example, that organizations with a greater female representation in the IT department tend to view IT as more strategic, and tend to be further along with their digital transformation initiatives. And with the technology skills shortage (see Trend 5 below) only forecasted to increase, successful organizations will have figured out that their IT workforce needs to better mirror the diversity of society at large, or risk losing out.

In 2019 we saw some of the big tech vendors make strong commitments, perhaps belatedly, to improving the diversity of their workforces – Dell Technologies, for example, says women will account for half of its workforce (up from 30% today), and 40% of managers, by 2030.

As environmental, social and governance (ESG) issues continue to feature more heavily in strategic planning and procurement, we expect to see more signs of progress here through 2020 and beyond.

5. Navigating Access to Talent will be a Key Step to Successful Transformation

Experienced IT leaders are no strangers to skills shortages – in an industry characterized by continuous innovation, it comes with the territory. Yet the scale of the issue is growing; it’s both chronic and, in some areas, acute. Cloud-based skills are in particularly short supply – no great surprise since almost every organization is looking to re-platform multiple services to a range of public and hybrid clouds, as well as natively deploy new workloads to the cloud. Yet cloud skills are not the only ones in short supply; a lack of expertise in security, AI/ML and data science/analytics also features heavily in our research.

Faced with these shortages, the challenge for enterprises is how to avoid this becoming a roadblock to effective transformation. For some, we expect the answer to come in the form of managed service providers, particularly around cloud.

For many organizations, the skills shortage is emerging as a core basis for engagement with managed services firms, and an organizing principle in how these services are being developed and offered. Managed service providers will need to design their offerings to address the complexities of cloud via the individual skill sets most lacking in the enterprise. They will also have to build those services to meet the core requirements for managed services themselves – primarily that they improve the cost-effectiveness and performance of cloud services in use.

Having built services around specialized skill sets, successful service providers will bring those to market with an awareness of their place in the larger services ecosystem – partnering where appropriate to address the broader set of skills gaps.

6. Embracing Complexity, and Legacy Mission-Critical Apps

Rather than the simple ‘plug in and consume’ electricity analogy mooted a decade ago, enterprises are using hybrid cloud, cloud-native frameworks and innovative services like AI, serverless and the Internet of Things (IoT) higher up the stack to build their applications.

These things are rarely simple, but enterprises are choosing complexity because it delivers value in the form of differentiated offerings, more efficient applications, happier customers and lower costs. Our Cloud Price Index now tracks a colossal 1.4 million SKUs for sale from AWS, Google, Microsoft, Alibaba and IBM. The solution – and the path to enterprise value – lies in the pursuit of ‘optimization’ rather than ‘resolution.’

What is the difference?

Resolution suggests the dumbing down or simplification of complexity, but this can result in losing much of the value that complexity has created. Optimization means the complexity remains but is managed. Crucially, this optimization can’t just be driven by tools. Tools provide data that can be employed to optimize, but expertise is still required. Yes, analytic tools can recommend when a resource is underused, and automation can buy a better-sized instance when this is detected.

Meanwhile, the emergence of the public cloud as a viable and often preferred venue for running enterprise applications has been one of the most notable trends of the last decade.

Our data has consistently shown that while most organizations will continue to leverage multiple environments, the dominance of on-premises IT as the primary workload execution venue will diminish over the next few years in favor of off-premises environments.

Yet many firms still regard the public cloud as a bridge too far for their most critical applications; the importance of these applications – often the ‘crown jewels’ of the business (large, old, with multiple dependencies) – means the risk and cost associated with moving them is too steep. For some regulated firms, moving them ‘off-prem’ to a third-party cloud is simply not allowed. But these applications can’t be entirely left behind as the rest of the IT estate moves to cloud, so what gives?

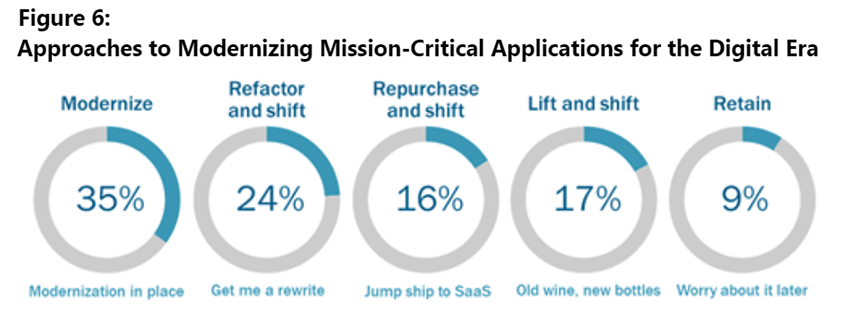

‘Modernization in place’ remains the single-most-common approach to upgrading mission-critical legacy applications. However, as cloud innovation continues, we expect to see alternatives increasingly come into play; the ability to ‘refactor and shift’ legacy applications is gaining momentum as organizations look to leverage the hyperscalers’ tools and functionality for cloud-forward application modernization. And, having established an off-prem beachhead, the hyperscalers will begin moving on-prem more aggressively in 2020 with their various ‘cloud to ground’ initiatives, most notably AWS with Outposts.

The future of cloud for most organizations may be hybrid, but IT suppliers that expect to continue selling gear into on-premises datacenters should position automation, visibility and professional/managed services alongside the hardware to help the legacy application-modernization process along – before the hyperscalers get there first.

7. Edge Computing Gains Some Definition

Three years ago, the idea that analytics for IoT would be done someplace other than the cloud was a novelty, denoted with the ‘edge computing’ moniker.

In the intervening years, the concept of edge computing has matured into a continuum of locations depending on the context of the application or vendor platform. This ranges from sensors and embedded computing at the point of IoT data origination to the ‘first landing’ location of data such as IoT gateways, network operator infrastructure and third-party datacenters.

The motivation for best execution venue varies depending on industry and application; however, the key drivers for workload placement at the edge for respondents in 451 Alliance’s IoT study were to improve security (55%), speed data analysis (44%), reduce volume of data transmission (35%) and lower storage costs (39%).

Examples of edge and near-edge applications are advanced driver-assistance systems and vehicle-to-vehicle in transportation, and connected worker and production monitoring in manufacturing.

Meanwhile, edge is shifting from a vague prediction to a driver of datacenter demand, and we expect to see the first real evidence of smaller-scale datacenter deployments emerge this year. Much of the focus for edge computing remains on IoT capabilities, whether it be for inventory monitoring, intelligent logistics, fleet tracking, remote connectivity or smart-city deployments. Constraints across the network are also driving edge conversations for media, content and cloud.

Edge computing deployments will increase in 2020 and beyond as standards efforts begin to bear fruit, and as manufacturers release manageability, security and provisioning tools for the plethora of distributed edge devices.

The distributed data management problem presented by applications and machine data being executed at the edge, near the edge and in the cloud will need to be reconciled into role-based views on the enterprise data estate, as well as integrated across all these devices for insights that require context from multiple data sources. Manufacturers such as Hitachi and Fujitsu have already begun this reconciliation of multiple data sources from the edge to the cloud.

8. Tech’s ‘Millennials’ Set to Make their Mark in M&A

Just as the IT market has transitioned through distinct eras, the tech M&A market is heading into a new decade in the midst of a generational shift.

The tech industry’s household names, which have dominated M&A since the first print in the industry, are no longer dealing like they used to. In place of the Baby Boom-era buyers, the tech industry’s Millennials look set to make their mark in the 2020s. The ‘out with the old and in with the new’ disruption shows up most clearly at the top end of the M&A market. In 2019 – as the curtain came down on the decade that had been dominated by big-cap tech acquirers – Oracle, Microsoft, IBM and SAP, collectively, did not put up a single billion-dollar print.

Up until last year’s notable absence, our data shows that the well-established quartet had been averaging three or four acquisitions valued at more than $1bn each year. Instead of the ‘usual suspects’ in M&A, the new market makers are tech companies born in the past two decades or so – companies such as Salesforce.com, Splunk and Workday.

9. Cloud-native and Hyperscale Approaches Continue to Drive ‘Invisible’ Infrastructure

We predicted a year ago that cloud-native approaches to application development – utilizing technologies such as containers, microservices, Kubernetes and serverless – would gain strong momentum, and that has proved to be so, particularly with regard to microservices adoption.

Enterprises can go faster with cloud and be more efficient with microservices, which is why 49% of enterprises rated microservices as their most important cloud-native technology in our 451 Alliance DevOps study.

However, as deployments scale up and complexity grows (meaning more than one Kubernetes cluster/application/team), a service mesh will be required to provide communications between all of the moving parts.

What’s next?

It is new territory, but in 2020, we will see the developer piece (distributed functions, modularization, multiple programming languages, IDEs) start to come together with the operational piece (making sure stuff works). With the breadth of services available, the key to success will be finding the right combinations and operationalizing services to deliver the benefits as advertised by their suppliers – speed, scale and agility. Service mesh is crucial to this.

Meanwhile, hardware design is evolving rapidly once again to keep up with the changing demands of emerging workloads. New processors, memory, storage and networking technologies are combining to build the next generation of ‘invisible’ infrastructure. The hyperscalers were the first to turn to custom silicon as an alternative to general-purpose CPUs. They did so because they were approaching a cost wall while planning the infrastructure requirements that would be needed to run compute-intensive machine learning and AI workloads (such as language translation apps and image processing).

In 2020 this trend will be in evidence well beyond the hyperscalers as it is applied to second- and third-tier cloud service providers and enterprise datacenter private clouds. Large servers stuffed with GPUs (and soon specialist AI processors) are already in widespread deployment – the equivalent of the powerful developer workstations of the past.

And, of course, there are implications elsewhere in the system design: smart NICs and RDMA (remote direct memory access) networking stacks to handle the huge increase in server-to-server east-west traffic; end-to-end NVMe storage, NVMe over fabrics, and associated software such as the storage performance development kit; and simplified programming models so that developers can code once across multiple chip architectures – CPU, GPU, FPGAs or specialist AI accelerators.

Future infrastructure platforms for cloud-native workloads, whether offered by hyperscalers or enterprise systems companies, will see the closer integration of scalar, vector, matrix and spatial compute resources with storage and high-speed communications, using a unified programming model.

10. New ‘Workspaces’ are Desired by Users, but Add Confusion to Market

One of the driving forces behind the evolution of productivity software is the need to address the growing sprawl of applications that’s happening within most companies. Other drivers are context switching; silos of people, information and workflows; and the workforce’s disengagement with their day-to-day tooling – all of which are highly attritional to employees’ morale and productivity.

A recent 451 Alliance Workforce Productivity & Collaboration study found that only one-third of employees are ‘very satisfied’ with the mix of tooling they must use to get their work done. Respondents indicated that having to use too many apps is the biggest overall work pain point, and 40% said they believe the number of apps they have to use will increase over the next year. To combat this, a growing number of vendors in traditional segments such as content management, project management, big productivity suites and intranets are creating new types of converged workspace experiences.

This market is in early days and hasn’t crystallized into a single, defined category yet (and may never do so); vendors are putting their stakes in the ground, variously calling their offerings workspaces, work platforms and work systems. This is definitely a positive direction, but confusion around the naming and capabilities of workspace products is coming along for the ride.

11. Security will Wake Up to the True Scale of Exposure to Third-Party Risks

Recently we have seen increased emphasis on technologies designed to protect organizations against the risks arising from third parties. Software composition analysis, for example, enables organizations to mitigate their exposure to security vulnerabilities in open source software – not a trivial matter considering the range of functionality enabled by open source and its contribution to a host of development efforts. Meanwhile, an entire segment of vendors has grown up around the assessment of digital risks in technology suppliers, helping those suppliers and their customers to become more aware of exposures and ways to mitigate them effectively before they introduce issues beyond the scope that the customer alone can contain. We believe this is only the beginning of a much larger trend.

Organizations are becoming deeply dependent on the many third-party services and capabilities embedded into the digital landscape, which greatly increases the level of risk exposure. This concern goes well beyond specific domains such as cloud infrastructure or application security. When considering the full range of third-party services on which enterprises rely – e.g., customer relationship management, payments processing, supply chain integration and human resources, as well as the integrated communications, workflow and collaboration systems that underpin transformational drivers such as DevOps – the true scale and criticality of the problem begin to emerge.

Addressing third-party risk means a vast scope of visibility, a widened aperture for threat intelligence, greater responsibility for privacy and compliance, and a need for more responsive threat recognition and containment on multiple fronts. It will also require intelligent automation and response to avoid overwhelming security organizations with the sheer scale of increased demand – which, in turn, will introduce new opportunities for service providers as well.

It also introduces another factor likely to shape security in the near future: the impact of industries, such as insurance, focused on mitigating business risk beyond the narrow confines of IT or information security.

12. A Leap Year ahead for Quantum Computing?

The year ahead will see some initial real-world adoption for one of the ‘buzzier’ areas where science and technology have collided: quantum computing.

While it’s still very early on, hyperscalers and major technology companies have planted flags around their quantum capabilities. IBM and Google have been building superconducting systems, with the latter declaring it has achieved the ‘quantum supremacy’ milestone, which others are hotly debating. Microsoft has built its own quantum computer, as well as a development environment, and it and AWS are creating their own marketplaces, providing access to partners such as D-Wave, Honeywell, IonQ and Rigetti. And in a recent 451 Alliance study, 15% of respondents said they were evaluating the potential for quantum computing.

The problems that this noisy, intermediate-scale quantum (NISQ)-level technology could solve are still simple but nonetheless important – chemical interactions of a small number of atoms and electron behavior in semiconductors, for example.

Important advances are occurring, such as the means to reduce error rates and increase coherence times for qubits (quantum bits) and scale the interconnection capacity between them to increase the effective computational power, referred to as ‘quantum volume.’

Development environments are maturing, and algorithms are improving their utility. With these improvements, quantum computers will be able to take the first steps to become accelerators to classical computers, but stand-alone systems will have to wait until later in the decade.
