
The landscape of artificial intelligence (AI) infrastructure is rapidly evolving, driven by the increasing demand for AI and machine learning (ML) technologies. As organizations strive to keep up with technological advancements, it’s important to understand the current trends and challenges in AI infrastructure.

Understanding AI infrastructure

AI infrastructure is more than just a collection of graphics processing units (GPUs). It encompasses a range of hardware and software components designed to support AI and ML applications. On the hardware side, these components often include GPUs, custom silicon, and other accelerators that handle compute-intensive workloads. On the software side, AI infrastructure includes tools that orchestrate and manage that hardware so the components work together reliably.

Organizations can acquire AI infrastructure under either a capital expenditure (capex) model, such as a traditional hardware purchase, or an operating expense (opex) model, such as a cloud-based subscription. This flexibility lets each organization choose the approach that best fits its needs and budget constraints.

Current trends in AI infrastructure

Demand for AI infrastructure has surged as organizations and consumers increasingly adopt AI and ML technologies. This has led to an influx of new technologies and vendors across the compute, storage, networking, cloud, and edge spaces. Organizations now have to navigate this complex ecosystem to find the solutions that best fit their requirements.

Key trends shaping the AI infrastructure landscape include:

- Increased reliance on cloud-based solutions: Organizations are showing a preference for cloud environments over on-premises solutions. This shift is driven by the cost-effectiveness and scalability of cloud-based accelerators, which allow businesses to avoid significant upfront investments and procurement challenges associated with on-premises infrastructure.

- Integration with AI/ML frameworks: AI infrastructure is increasingly being integrated with popular AI/ML libraries and frameworks such as PyTorch and TensorFlow. This integration streamlines the development and deployment of AI applications, enabling organizations to leverage existing resources and expertise.

- Focus on performance optimization: To meet the growing demands of AI workloads, organizations are prioritizing improvements in storage capacity, memory capacity, and networking. Edge servers and devices are also being used to accelerate ML workloads, keeping latency low by processing data close to the end user (see Figure 1).

Challenges in managing AI workloads

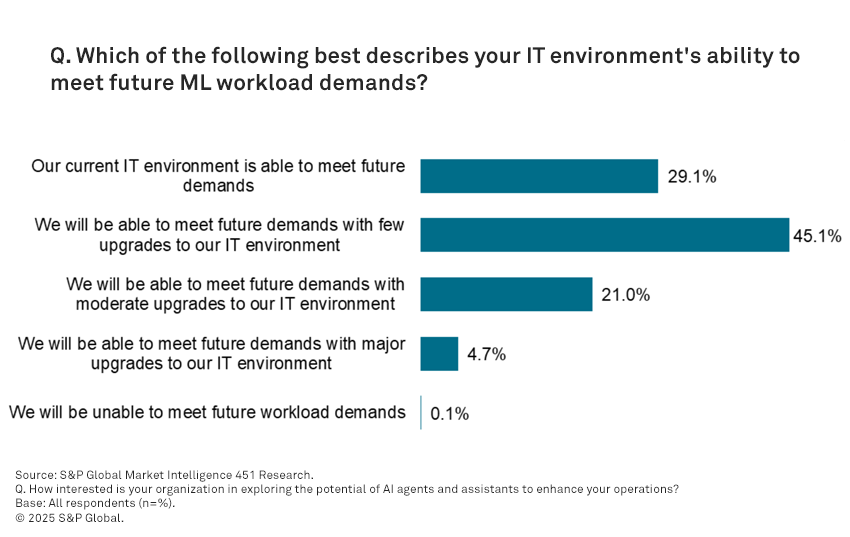

Despite these advancements, many organizations feel inadequately prepared to handle future AI and ML workload demands. According to a survey conducted by 451 Research, a part of S&P Global Market Intelligence, over 70% of respondents said their organization's current IT infrastructure left it unprepared for these demands.

This highlights the need for strategic investments in AI infrastructure to address performance bottlenecks and ensure readiness for future demands.

Common challenges include:

- Limited access to dedicated hardware: The availability of GPUs and other accelerators remains a significant challenge for many organizations. This limitation hinders their ability to fully capitalize on AI opportunities.

- Complexity of integration: Integrating AI infrastructure with existing systems and processes can be complex and time-consuming. Organizations must navigate compatibility issues and ensure seamless operation across diverse environments.

The path forward

Key areas to watch in the AI infrastructure space include:

- Impact of open-source models: The availability of low-cost open-source models, such as those from DeepSeek, may influence infrastructure and data center demand.

- Budget considerations and geopolitical factors: Organizations will need to balance their AI infrastructure investments with budget constraints and geopolitical uncertainties, ensuring that their strategies align with broader business objectives.

By understanding the current trends and challenges in AI infrastructure, organizations can position themselves for success in the rapidly evolving AI landscape.

This content may be AI-assisted and is composed, reviewed, edited and approved by S&P Global in accordance with our Terms of Service.