
As organizations shift compute closer to data sources, edge AI is emerging as a practical architecture for distributed intelligence, with inferencing workloads increasingly deployed across constrained environments, from cloud-provider edge platforms to embedded system-on-chip devices. According to an S&P Global Market Intelligence 451 Research study of edge and infrastructure services, focused on AI and inferencing, 49% of organizations reported using a mix of lightweight and moderate-complexity models at the edge, underscoring the need to balance capability with resource constraints. Inferencing tasks lead at the edge, but training and fine-tuning are also gaining traction, suggesting a shift toward more autonomous, context-aware systems. Hardware and software choices are similarly pragmatic: open-source frameworks and commercial AI tools coexist, blending flexibility with scale. The data points to a maturing edge AI landscape shaped less by hype and more by architectural realism and operational fit.

The Take

Vendors targeting the edge AI and inferencing market must design for constraint, not excess. The data shows a clear preference for lightweight models, system-on-chip (SoC) hardware and open-source inferencing engines — choices driven by operational realities, not theoretical performance.

With 49% of organizations deploying a mix of lightweight and moderate-complexity models and only 7% using large or advanced ones, the market is signaling a need for efficiency, portability and integration readiness.

Cloud-provider edge platforms dominate deployment, but private and on-premises options remain relevant, suggesting a hybrid future. Security, compliance and system integration are persistent barriers, meaning vendors must prioritize interoperability and risk mitigation over novelty. Support for fine-tuning and training at the edge is growing, indicating demand for adaptive, context-aware solutions. Success in this space requires flexibly sized, resource-aware offerings that align with organizations’ priorities. Vendors that ignore these constraints risk building for a market that doesn’t exist.

Summary of findings

Efficiency and compliance are the top drivers for edge AI deployment. Operational efficiency and safety (46%) and data security or compliance (43%) are the leading motivations for deploying AI at the edge. These priorities outpace other drivers such as cost (34%), scalability (31%) and latency (18%), indicating that organizations are primarily focused on reliability and regulatory alignment. The emphasis on safety and compliance suggests edge AI is positioned as a risk-mitigating technology rather than purely a performance enhancer.

Integration and security remain persistent deployment barriers. Integration with existing systems (37%) and security vulnerabilities (35%) are the most cited challenges in deploying edge AI. Skills gaps (32%) and model complexity (28%) also present significant hurdles, suggesting that technical and organizational readiness are key limiting factors. These inhibitors point to the need for better tooling, training and architectural alignment to scale edge AI effectively.

Commercial AI tools lead, but internal and custom approaches remain strong. Commercially available AI tools, such as public large language models, are used by 64% of organizations, making them the most common format for AI adoption. Meanwhile, 40% use internally implemented commercial tools and 40% build custom AI applications in-house. This mix reflects a balance between convenience and control, with many organizations opting for hybrid strategies that combine external capabilities with internal customization.

Model complexity is calibrated to edge constraints. Nearly half (49%) of organizations deploy a mix of lightweight and moderate-complexity models at the edge, while only 7% use large or advanced models. This reflects a pragmatic approach to balancing performance with hardware limitations, especially in distributed or resource-constrained environments. The low adoption of complex models underscores the need for optimization and efficiency in edge inferencing workloads.

Inferencing is the dominant edge AI workload — but training is not far behind. Running pre-trained models (inferencing) is performed or planned for by 47% of organizations, making it the most common edge AI function. Surprisingly, 46% also report training models from scratch at the edge, and 52% are fine-tuning models locally. This indicates that edge environments are evolving beyond simple inferencing to support more dynamic and adaptive AI workflows.

Cloud platforms dominate edge AI deployment locations. Nearly 43% of organizations run or plan to run edge AI workloads via cloud-provider edge platforms, making them the most common deployment venue. Private edge clouds (34%) and on-premises edge servers (31%) follow, while telecom 5G/multi-access edge computing services (19%) and edge devices themselves (19%) remain less utilized. This suggests that while edge AI is meant to decentralize compute, many organizations still rely on cloud-based infrastructure to manage and scale these workloads.

SoC platforms are the preferred inferencing hardware. About 42% of respondents use SoC platforms such as NVIDIA Jetson and Qualcomm Snapdragon for edge AI inferencing, ahead of standard central processing units (36%) and dedicated AI accelerators (26%). Microcontrollers with embedded machine learning (26%) and ruggedized industrial systems (22%) also show meaningful adoption. This reflects a strong preference for compact, power-efficient hardware that can support AI workloads in constrained or embedded environments.

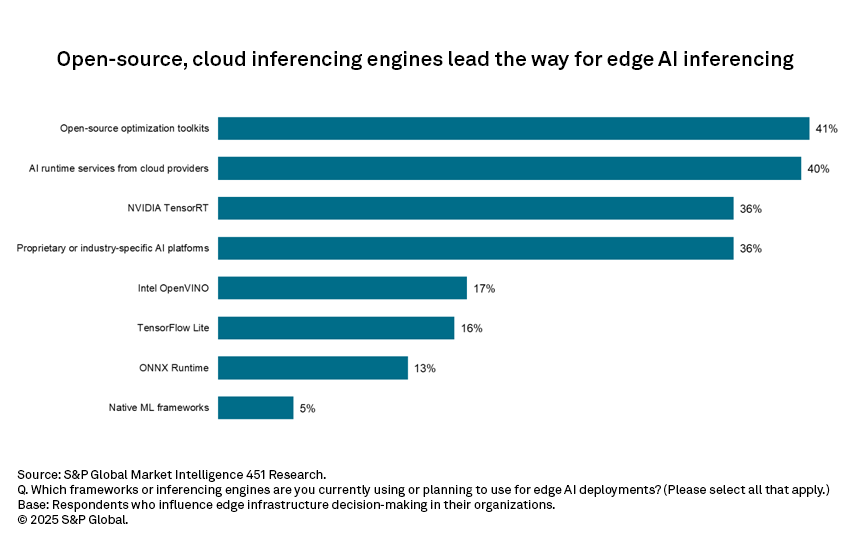

Open-source frameworks lead inferencing engine choices. Open-source optimization toolkits (41%) and cloud-provider runtime services (40%) are the most commonly used inferencing engines for edge AI. NVIDIA TensorRT and proprietary platforms are used by 36% each, while Intel OpenVINO (17%) and TensorFlow Lite (16%) trail behind. The data indicates a clear tilt toward flexible, community-driven tools that can be adapted across diverse hardware and deployment scenarios.
